22 Min to complete

Introduction

In this lab, you will create a Kubernetes cluster on Google Kubernetes Engine (GKE) and deploy the following ScyllaDB products using the ScyllaDB Operator:

- A three-node ScyllaDB cluster

- The ScyllaDB Manager

- The ScyllaDB Monitoring stack

- And finally, two simple applications using CQL and the DynamoDB API.

Sounds hard? Not when using Kubernetes, so let’s get to it.

Prerequisites

- Make sure you have the Google Cloud SDK installed. It includes the gcloud command-line tool. To do so, follow the instructions.

- Make sure you have the account and project properties set.

- Make sure you have kubectl installed.

- Make sure Helm is installed.

- Ensure that you have enabled the Google Kubernetes Engine API.
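The prerequisites above can be checked in one pass before starting. The following is a minimal sketch: the function name `require_tools` is illustrative and not part of the lab repository, and it only verifies that each command is on your `PATH`, not that it is configured correctly.

```shell
# Preflight sketch: report every required CLI that is missing from PATH.
# require_tools is a hypothetical helper name, not part of the lab.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage for this lab (uncomment to run):
# require_tools gcloud kubectl helm git wget tar || exit 1
```

The function reports all missing tools rather than stopping at the first one, so a single run shows everything that still needs to be installed.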

Walkthrough

If you haven’t done so yet, start by downloading the example from git:

git clone https://github.com/scylladb/scylla-code-samples.git

Next, spin up a GKE cluster.

Set the GCP user and project environment variables based on your current gcloud configuration:

export GCP_USER=$(gcloud config list account --format "value(core.account)")

export GCP_PROJECT=$(gcloud config list project --format "value(core.project)")
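If gcloud has no account or project configured, these variables may end up empty without any visible error. A small guard, sketched below with the hypothetical helper name `require_vars`, can catch that before the cluster script runs.

```shell
# Sketch: fail early if any of the named variables is empty or unset.
# require_vars is a hypothetical helper name, not part of the lab.
require_vars() {
  missing=0
  for name in "$@"; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "error: $name is empty or unset" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage for this lab (uncomment to run):
# require_vars GCP_USER GCP_PROJECT || exit 1
```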

Next, start the script to create a GKE cluster which you’ll use throughout this demo. This script creates the K8s cluster in two availability zones: us-west1-b and us-west1-c.

Each zone consists of:

- Three nodes (n1-standard-4) dedicated to ScyllaDB

- A single node (n1-standard-8) dedicated to utilities such as Grafana, Prometheus, and the ScyllaDB Operator.
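To get a rough idea of the footprint before creating the cluster (for example, to compare against your project's quota), you can add up the nodes described above. This sketch takes the layout at face value (two zones, three n1-standard-4 nodes and one n1-standard-8 node per zone) and uses the standard vCPU counts for those machine types (4 and 8). The variable names are illustrative.

```shell
# Back-of-the-envelope sizing for the layout described above.
zones=2
scylla_nodes_per_zone=3     # n1-standard-4: 4 vCPUs each
utility_nodes_per_zone=1    # n1-standard-8: 8 vCPUs each

total_nodes=$(( zones * (scylla_nodes_per_zone + utility_nodes_per_zone) ))
total_vcpus=$(( zones * (scylla_nodes_per_zone * 4 + utility_nodes_per_zone * 8) ))

echo "nodes: $total_nodes, vCPUs: $total_vcpus"
# prints "nodes: 8, vCPUs: 40"
```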

Make sure you are in the scylla-code-samples/kubernetes-university-live directory, then run:

./gke.sh --gcp-user "$GCP_USER" -p "$GCP_PROJECT" -c "university-live-demo"

Next, install Cert Manager, which is a ScyllaDB Operator dependency.

As the name suggests, Cert Manager is responsible for issuing TLS certificates. It is a Kubernetes add-on that automates the management and issuance of certificates. The Operator's Validating Webhook validates user-provided ScyllaCluster resources: on every creation and update of a ScyllaCluster resource, the K8s api-server contacts the webhook to check whether the resource passes validation. This communication uses TLS, which is why the Operator requires a certificate that Cert Manager provides.

kubectl apply -f cert-manager.yaml

To confirm that the installation is complete, wait until the Cert Manager Custom Resource Definitions (CRDs) reach the established state, which means every K8s api-server in the cluster has accepted them, and until all required Cert Manager Pods are ready:

kubectl wait --for condition=established crd/certificates.cert-manager.io crd/issuers.cert-manager.io

kubectl -n cert-manager rollout status --timeout=5m deployment.apps/cert-manager-cainjector

kubectl -n cert-manager rollout status --timeout=5m deployment.apps/cert-manager-webhook
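When these commands run from a script immediately after `kubectl apply`, a wait may fail simply because the resource has not been created yet. One way to make the sequence more forgiving is a generic retry wrapper. The sketch below is an illustration only: the name `retry` and the attempt and delay values are assumptions, not part of the lab.

```shell
# retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it succeeds or ATTEMPTS is exhausted.
# Hypothetical helper, not part of the lab repository.
retry() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    echo "attempt $i/$attempts failed: $*" >&2
    i=$((i + 1))
    # Only sleep if another attempt is coming.
    if [ "$i" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  return 1
}

# Usage for this lab (uncomment to run):
# retry 10 6 kubectl wait --for condition=established \
#   crd/certificates.cert-manager.io crd/issuers.cert-manager.io
```

The same wrapper can be placed in front of any of the `kubectl wait` or `kubectl rollout status` commands used later in this lab.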

Now, install the ScyllaDB Operator:

kubectl apply -f operator.yaml

Again, to make sure the installation is complete and ready to go, wait for the CRDs, and make sure all the ScyllaDB Operator Pods are ready:

kubectl wait --for condition=established crd/scyllaclusters.scylla.scylladb.com

kubectl wait --for condition=established crd/nodeconfigs.scylla.scylladb.com

kubectl wait --for condition=established crd/scyllaoperatorconfigs.scylla.scylladb.com

kubectl -n scylla-operator rollout status deployment.apps/scylla-operator

kubectl -n scylla-operator rollout status deployment.apps/webhook-server

Now, you’ll install the monitoring stack. It will give you observability into what is going on within the ScyllaDB cluster, whether nodes are healthy, and provide you with query statistics. Start by adding the Prometheus Community Helm repository to the local registry:

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

Then, update the local registry:

helm repo update

And install the monitoring stack (Grafana, Prometheus):

helm install monitoring prometheus-community/kube-prometheus-stack --values monitoring/values.yaml --values monitoring.yaml --create-namespace --namespace scylla-monitoring

Next, you’ll install the ScyllaDB Monitoring dashboards. Download and extract them:

wget https://github.com/scylladb/scylla-monitoring/archive/scylla-monitoring-3.6.0.tar.gz

tar -xvf scylla-monitoring-3.6.0.tar.gz

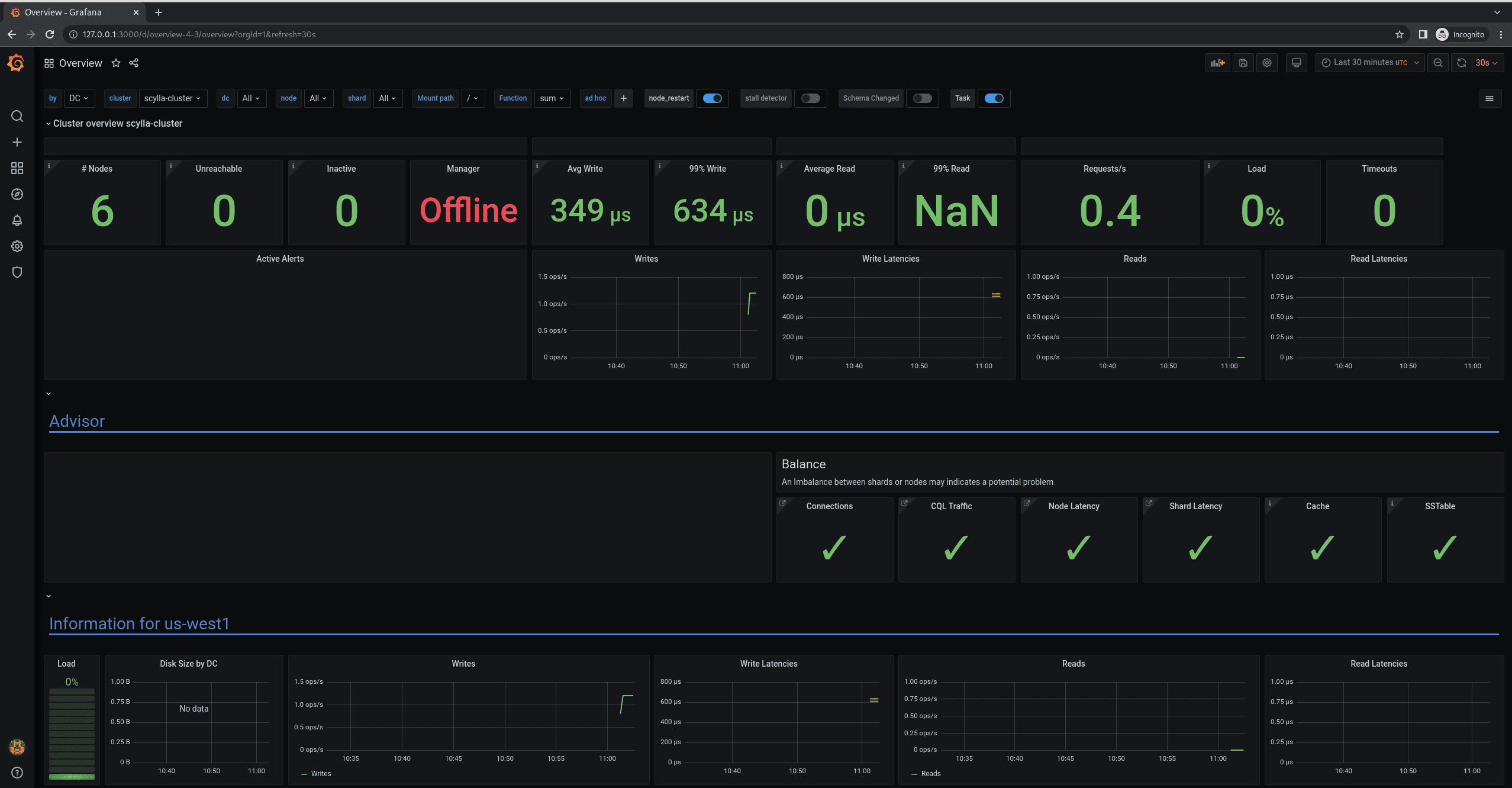

The ScyllaDB dashboards provide several views of the node, as well as query statistics. These range from simple ones like node health and workflow latency to more detailed ones. Import the ScyllaDB dashboards to Grafana:

kubectl -n scylla-monitoring create configmap scylla-dashboards --from-file=scylla-monitoring-scylla-monitoring-3.6.0/grafana/build/ver_4.3

kubectl -n scylla-monitoring patch configmap scylla-dashboards -p '{"metadata":{"labels":{"grafana_dashboard": "1"}}}'

The ScyllaDB Manager dashboards provide an overview of past and ongoing tasks like repairs and backups as well as different statistics. To import the ScyllaDB Manager dashboard to Grafana:

kubectl -n scylla-monitoring create configmap scylla-manager-dashboards --from-file=scylla-monitoring-scylla-monitoring-3.6.0/grafana/build/manager_2.2

kubectl -n scylla-monitoring patch configmap scylla-manager-dashboards -p '{"metadata":{"labels":{"grafana_dashboard": "1"}}}'

Now, install the ScyllaDB Manager, which can be used for repairs and backups:

kubectl apply -f manager.yaml

Wait until all required Pods are ready, which indicates that the installation is complete:

kubectl -n scylla-manager rollout status statefulset.apps/scylla-manager-cluster-manager-dc-manager-rack

kubectl -n scylla-manager rollout status deployment.apps/scylla-manager-controller

This lab does not take a deep dive into ScyllaDB Manager. You can learn more about it in this lesson.

Now, install ScyllaDB and the ScyllaDB Manager ServiceMonitors. ServiceMonitors are used to tell Prometheus from where it should scrape the metrics. Both ScyllaDB and ScyllaDB Manager expose metrics under a specific port, and these ServiceMonitors contain this information.

Create the `scylla` namespace where you’ll install the ScyllaDB ServiceMonitor as well as the ScyllaCluster:

kubectl apply -f namespace.yaml

Install the ScyllaDB and ScyllaDB Manager ServiceMonitors:

kubectl apply -f monitoring/scylla-service-monitor.yaml

kubectl apply -f monitoring/scylla-manager-service-monitor.yaml

Next, set up access to Grafana. Create a tunnel to port 3000 inside the Grafana pod. This command keeps running in the foreground for as long as the tunnel is open, so leave it running:

kubectl -n scylla-monitoring port-forward deployment.apps/monitoring-grafana 3000

To access Grafana, browse to http://127.0.0.1:3000/.

Use the default user/password: admin/admin.

Next, in a new terminal tab, you’ll deploy a small cluster to benchmark the disks. You will use the results later to speed up the bootstrap.

Deploy our example ScyllaDB cluster:

kubectl apply -f cluster.yaml

Wait until ScyllaDB boots up and reports readiness to serve traffic.

kubectl -n scylla rollout status statefulset.apps/scylla-cluster-us-west1-us-west1-b

Next, copy the IO benchmark results from the ScyllaDB Pod into a ConfigMap.

Start by saving the benchmark result to the `io_properties.yaml` file:

kubectl -n scylla exec -ti scylla-cluster-us-west1-us-west1-b-0 -c scylla -- cat /etc/scylla.d/io_properties.yaml > io_properties.yaml
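Before building a ConfigMap from the captured file, it can be worth confirming that the redirect actually produced content. In addition, when `kubectl exec` is run with a TTY (the `-t` flag), the captured output may contain carriage returns. The sketch below checks for an empty file and strips carriage returns in place. The helper name `normalize_capture` is hypothetical.

```shell
# normalize_capture FILE
# Fails if FILE is missing or empty, then removes any carriage returns.
# Hypothetical helper, not part of the lab repository.
normalize_capture() {
  file=$1
  if [ ! -s "$file" ]; then
    echo "error: $file is missing or empty" >&2
    return 1
  fi
  tmp=$(mktemp) || return 1
  # Write the cleaned content back into the original file.
  tr -d '\r' < "$file" > "$tmp" && cat "$tmp" > "$file"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Usage for this lab (uncomment to run):
# normalize_capture io_properties.yaml || exit 1
```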

Then, create a ConfigMap from the `io_properties.yaml` file:

kubectl -n scylla create configmap ioproperties --from-file io_properties.yaml

Remove the previous ScyllaDB cluster and clear the PersistentVolumeClaims (PVC) to release and erase the disk:

kubectl -n scylla delete ScyllaCluster scylla-cluster

kubectl -n scylla delete pvc --all

Now, you’ll deploy the IO-tuned ScyllaDB cluster. Take a look at the definition in the cluster_tuned.yaml file. IO Setup is disabled via additional ScyllaArgs, and the previously created ConfigMap is attached as a Volume.

Start by deploying the cluster and waiting until both racks are ready. This time ScyllaDB is using a precomputed disk benchmark, so each Pod should be ready faster:

kubectl apply -f cluster_tuned.yaml

kubectl -n scylla rollout status statefulset.apps/scylla-cluster-us-west1-us-west1-b

kubectl -n scylla rollout status statefulset.apps/scylla-cluster-us-west1-us-west1-c
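If you want to see the difference for yourself, you can time the rollout wait for the benchmark cluster and for the tuned cluster and compare the two numbers. A minimal sketch follows. The helper name `elapsed_seconds` is illustrative, and whole-second resolution from `date +%s` is assumed to be precise enough for this comparison.

```shell
# elapsed_seconds COMMAND [ARGS...]
# Runs COMMAND (its output goes to stderr) and prints the wall-clock
# duration in whole seconds on stdout. Hypothetical helper.
elapsed_seconds() {
  start=$(date +%s)
  status=0
  "$@" >&2 || status=$?
  end=$(date +%s)
  echo $((end - start))
  return "$status"
}

# Usage for this lab (uncomment to run):
# elapsed_seconds kubectl -n scylla rollout status \
#   statefulset.apps/scylla-cluster-us-west1-us-west1-b
```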

Next, you are going to deploy an application that sends CQL traffic to ScyllaDB and observe what changes in the Monitoring dashboards. Create a K8s Job to execute cassandra-stress and generate CQL traffic:

kubectl apply -f cassandra-stress.yaml

Browse to Grafana (http://127.0.0.1:3000) to see metrics from the workload. The default login is admin/admin.

Click on Home, then select the Overview dashboard. You should see an increase in the load and the number of requests. You can find a summary of valuable statistics at the top of the screen. These include node health, latencies, load, requests, timeouts, etc. For more advanced users, more detailed dashboards are available in the left panel.

Once you are done, clean up the ScyllaDB cluster, clear the disks, and remove the cassandra-stress Job:

kubectl -n scylla delete ScyllaCluster scylla-cluster

kubectl -n scylla delete pvc --all

kubectl delete -f cassandra-stress.yaml

Next, deploy the Alternator cluster. The difference between this and the previous one is an additional field in the ScyllaCluster spec, called `alternator`. It lets you set which port will expose the DynamoDB API (also called Alternator) as well as the default consistency level. You can learn more about Alternator here.

kubectl apply -f alternator.yaml

kubectl -n scylla rollout status statefulset.apps/scylla-cluster-us-west1-us-west1-b

kubectl -n scylla rollout status statefulset.apps/scylla-cluster-us-west1-us-west1-c

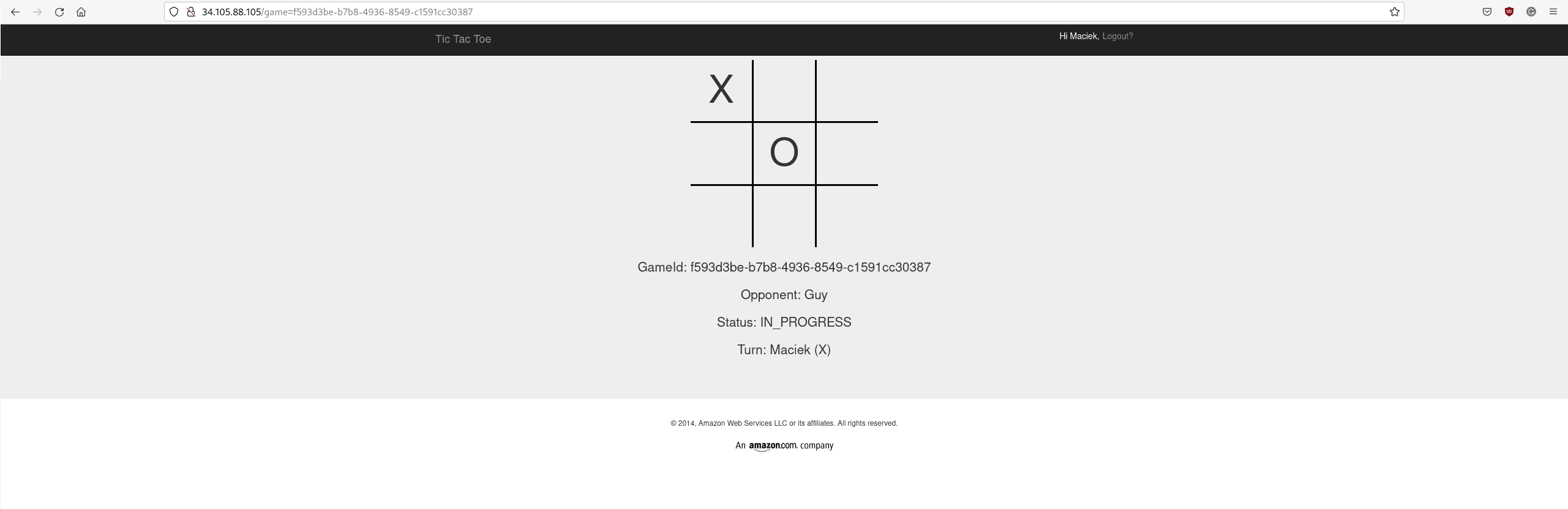

Now, you will run the TicTacToe game.

Amazon wrote this game as an example application for DynamoDB. Because the Alternator API is compatible with DynamoDB, you can run the same application without any changes, just pointing it to a different database. It won’t even notice that it’s not talking to DynamoDB:

kubectl apply -f tictactoe.yaml

kubectl -n tictactoe get svc

Check the external IP address of the game. It may take some time before it is assigned. If it isn’t assigned yet, rerun the above command.
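Instead of rerunning the command by hand, you can poll until a value appears. The sketch below is generic: `poll_value` is a hypothetical helper that reruns any command until it prints something non-empty. In the usage comment, `SERVICE_NAME` is a placeholder for whatever name the `get svc` output shows, and the example assumes the service is of type LoadBalancer and reports an IP address.

```shell
# poll_value ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Reruns COMMAND until it prints a non-empty value, then echoes that value.
# Hypothetical helper, not part of the lab repository.
poll_value() {
  attempts=$1
  delay=$2
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    value=$("$@" 2>/dev/null) || value=""
    if [ -n "$value" ]; then
      printf '%s\n' "$value"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage for this lab (uncomment and replace SERVICE_NAME to run):
# poll_value 30 10 kubectl -n tictactoe get svc SERVICE_NAME \
#   -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
```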

Once ready, access the game using the external IP address.

To play the game, enter your name and click Login. Then, invite a friend (or play against yourself). Once that friend logs in, you can start playing.

Enjoy the game!

Summary

In this lesson, you learned how to install several ScyllaDB products on Kubernetes, including installing the ScyllaDB Operator for Kubernetes and its dependencies. You deployed a highly available three-node ScyllaDB cluster with two supported APIs – CQL and Alternator. You also learned how to deploy applications using both of these APIs, and you saw how to run a distributed TicTacToe game.